Django + Celery + Redis: running asynchronous tasks and viewing the results (recommended)

Official documentation

- https://docs.celeryproject.org/en/latest/django/first-steps-with-django.html#using-celery-with-django (configuration guide)

- https://github.com/celery/celery/tree/master/examples/django (Django example project)

Other documentation

- https://www.jianshu.com/p/fb3de1d9508c (an introduction to Celery)

Development environment

- python 3.6.8

- django 1.11

- celery 4.3.0

- django-celery-results 1.1.2

- django-celery-beat 1.5.0

Install Redis

Install the Python client library for Redis

pip install redis

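(Note: the redis package installed with pip is only a client library for connecting to Redis. The Redis server itself must be installed separately; see the installation documentation mentioned above.)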
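
Because the client library and the server are installed separately, it can help to check both before going further. The following is a minimal sketch using only the standard library; the function names are made up for illustration, and it assumes a Redis server without a password on the default port 6379.

```python
import importlib.util
import socket


def client_library_installed(package="redis"):
    """Return True if the Python client package can be imported."""
    return importlib.util.find_spec(package) is not None


def server_answers_ping(host="127.0.0.1", port=6379, timeout=1.0):
    """Return True if a Redis server at host:port replies +PONG to PING."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as conn:
            conn.sendall(b"PING\r\n")  # Redis accepts this inline command form
            return conn.recv(16).startswith(b"+PONG")
    except OSError:
        # Connection refused, timeout, unreachable host, and so on
        return False


if __name__ == "__main__":
    print("client library installed:", client_library_installed())
    print("server reachable:", server_answers_ping())
```

If the first check passes and the second fails, the pip package is present but no Redis server is listening yet.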

Install Celery

pip install celery

Install django-celery-results

pip install django-celery-results

Configure settings.py

# Register the django_celery_results app
INSTALLED_APPS = [
    # ...
    'django_celery_results',  # view Celery task results
]

# Django cache (the django_redis backend is provided by the django-redis package)
CACHES = {
    "default": {
        "BACKEND": "django_redis.cache.RedisCache",
        "LOCATION": "redis://127.0.0.1:6379/1",
        "OPTIONS": {
            "CLIENT_CLASS": "django_redis.client.DefaultClient",
        }
    }
}

# Celery settings
# Note: celery.py loads these settings with config_from_object() and no namespace,
# so the old-style name BROKER_URL is used here. With namespace='CELERY' the
# same setting would be named CELERY_BROKER_URL.
BROKER_URL = 'redis://127.0.0.1:6379/0'  # Redis as the broker; the trailing /0 selects Redis logical database 0
# CELERYBEAT_SCHEDULER = 'djcelery.schedulers.DatabaseScheduler'  # periodic task scheduler: python manage.py celery beat
CELERYD_MAX_TASKS_PER_CHILD = 3  # replace each worker process after it has run 3 tasks, which limits memory leaks
# CELERY_RESULT_BACKEND = 'redis://127.0.0.1:6379/0'  # store results in Redis so they can be tracked
CELERY_RESULT_BACKEND = 'django-db'  # store results in the Django database
CELERY_CACHE_BACKEND = 'django-cache'  # Celery cache backend

# Serialization format for Celery messages
if os.name != "nt":
    # macOS and CentOS
    # start the worker with: celery -A joyoo worker -l info
    CELERY_ACCEPT_CONTENT = ['application/json', ]
    CELERY_TASK_SERIALIZER = 'json'
    # CELERY_RESULT_SERIALIZER = 'json'
else:
    # Windows
    # pip install eventlet
    # start the worker with: celery -A joyoo worker -l info -P eventlet
    CELERY_ACCEPT_CONTENT = ['pickle', ]
    CELERY_TASK_SERIALIZER = 'pickle'
    # CELERY_RESULT_SERIALIZER = 'pickle'
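
The number at the end of each Redis URL in the settings is the logical database index, which is how the broker (database 0) and the Django cache (database 1) share one Redis server without mixing their keys. A small sketch that takes the two URLs apart with the standard library; `describe_redis_url` is a hypothetical helper, not part of Celery or Django.

```python
from urllib.parse import urlparse


def describe_redis_url(url):
    """Split a redis:// URL into host, port and logical database index."""
    parts = urlparse(url)
    db = int(parts.path.lstrip("/") or 0)  # an empty path means database 0
    return {"host": parts.hostname, "port": parts.port or 6379, "db": db}


broker = describe_redis_url("redis://127.0.0.1:6379/0")  # BROKER_URL
cache = describe_redis_url("redis://127.0.0.1:6379/1")   # CACHES["default"]["LOCATION"]
print(broker)
print(cache)
```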

Create the django-celery-results tables

python manage.py migrate

(joyoo) yinzhuoqundeMacBook-Pro:joyoo yinzhuoqun$ python manage.py migrate

raven.contrib.django.client.DjangoClient: 2019-12-15 21:47:10,426 /Users/yinzhuoqun/.pyenv/joyoo/lib/python3.6/site-packages/raven/base.py [line:213] INFO Raven is not configured (logging is disabled). Please see the documentation for more information.

Operations to perform:

Apply all migrations: admin, auth, blog, captcha, contenttypes, django_celery_results, djcelery, logger, photo, sessions, sites, user, users

Running migrations:

Applying django_celery_results.0001_initial... OK

Applying django_celery_results.0002_add_task_name_args_kwargs... OK

Applying django_celery_results.0003_auto_20181106_1101... OK

Applying django_celery_results.0004_auto_20190516_0412... OK

Applying djcelery.0001_initial... OK

Add celery.py to the project package directory (the one that contains settings.py)

#!/usr/bin/env python3
# -*- coding: utf-8 -*-
"""
@author: yinzhuoqun
@site: http://zhuoqun.info/
@email: yin@zhuoqun.info
@time: 2019/12/14 17:21
"""
from __future__ import absolute_import, unicode_literals
from celery import Celery
from django.conf import settings
import os

# Use the name of the current working directory as the Django project name
# (this assumes commands are run from the project root)
project_name = os.path.split(os.path.abspath('.'))[-1]
project_settings = '%s.settings' % project_name

# Set the environment variable
os.environ.setdefault('DJANGO_SETTINGS_MODULE', project_settings)

# Instantiate Celery
app = Celery(project_name)

# Configure Celery from the Django settings file
app.config_from_object('django.conf:settings')

# Let Celery discover tasks.py in every installed app
app.autodiscover_tasks(lambda: settings.INSTALLED_APPS)
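
Deriving the project name from the current working directory only works when the command is started from the project root. One possible alternative, shown here as a sketch rather than the author's method, is to derive it from the location of the file itself. The path below is a hypothetical example; inside celery.py you would pass `__file__`.

```python
import os


def project_name_from_file(path):
    """Return the name of the directory that contains the given file."""
    return os.path.basename(os.path.dirname(os.path.abspath(path)))


# Hypothetical location of celery.py inside the project package
print(project_name_from_file("/srv/joyoo/joyoo/celery.py"))
```

With this approach the result no longer depends on where the worker or manage.py is launched from.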

Configure __init__.py in the same directory

from __future__ import absolute_import, unicode_literals

# This will make sure the app is always imported when
# Django starts so that shared_task will use this app.
from .celery import app as celery_app

import pymysql
pymysql.install_as_MySQLdb()

__all__ = ('celery_app',)

Add tasks.py to the app directory

#!/usr/bin/env python3
# -*- coding: utf-8 -*-
"""
@author: yinzhuoqun
@site: http://zhuoqun.info/
@email: yin@zhuoqun.info
@time: 2019/12/15 12:34 AM
"""
import json

import requests
# shared_task attaches the task to the app imported in __init__.py;
# the older "from celery import task" form is not available in Celery 5
from celery import shared_task
from django.core.mail import send_mail


@shared_task
def task_send_dd_text(url, msg, atMoblies, atAll=False):
    """
    Send a DingTalk text notification.
    :param url: DingTalk robot webhook URL
    :param msg: message text
    :param atMoblies: list of mobile numbers to @-mention
    :param atAll: whether to @-mention everyone
    :return: None
    """
    body = {
        "msgtype": "text",
        "text": {
            "content": msg
        },
        "at": {
            "atMobiles": atMoblies,
            "isAtAll": atAll
        }
    }
    headers = {'content-type': 'application/json',
               'User-Agent': 'Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:22.0) Gecko/20100101 Firefox/22.0'}
    r = requests.post(url, headers=headers, data=json.dumps(body))
    # print(r.text)


@shared_task
def task_send_mail(*args, **kwargs):
    """
    Send mail through Django; HTML is supported via html_message="html content".
    :param args: positional arguments passed on to send_mail
    :param kwargs: keyword arguments passed on to send_mail
    :return: None
    """
    send_mail(*args, **kwargs)
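
The body posted to DingTalk is plain JSON, so it can be built and inspected without a worker or a network call. The sketch below uses a hypothetical helper, `build_dd_text_body`, and assumes that `isAtAll` is meant to be a real boolean; a string such as "false" would be sent as a JSON string instead.

```python
import json


def build_dd_text_body(msg, at_mobiles=None, at_all=False):
    """Build the JSON body for a DingTalk text message (hypothetical helper)."""
    if not isinstance(at_all, bool):
        # "false" as a string is still a non-empty string, not a boolean
        raise TypeError("at_all must be True or False, got %r" % (at_all,))
    return {
        "msgtype": "text",
        "text": {"content": msg},
        "at": {"atMobiles": list(at_mobiles or []), "isAtAll": at_all},
    }


body = build_dd_text_body("Async task call succeeded", ["18612345678"])
print(json.dumps(body))
```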

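Call the task from views.py

from django.conf import settings
from django.http import HttpResponse


# Assume the URL for this view is configured as /test
def test(request):
    # Import the task
    from .tasks import task_send_dd_text
    # Queue the task; .delay() returns immediately and the worker runs it
    task_send_dd_text.delay(settings.DD_NOTICE_URL, "Async task call succeeded", atMoblies=["18612345678"], atAll=False)
    return HttpResponse("test")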
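
The arguments given to .delay() travel to the worker as a serialized message. With the JSON serializer chosen in settings.py for macOS and CentOS, they have to be JSON-friendly: strings, numbers, lists, dicts and booleans are fine, but something like a request object is not. A rough pre-check with the standard library is sketched below. `json_safe` and `FakeRequest` are made up for illustration, and Celery's own JSON encoder accepts a few extra types, so this check is stricter than Celery itself.

```python
import json


def json_safe(*args, **kwargs):
    """Return True if the call arguments can be encoded as plain JSON."""
    try:
        json.dumps({"args": args, "kwargs": kwargs})
        return True
    except (TypeError, ValueError):
        return False


class FakeRequest(object):
    """Stand-in for an object that cannot be sent to a worker as JSON."""


ok = json_safe("https://example.invalid/robot", "hello",
               atMoblies=["18612345678"], atAll=False)
bad = json_safe(FakeRequest())
print(ok, bad)
```

A common way around this is to pass an ID or a few plain fields and let the task look up whatever else it needs.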

Start the Celery worker

# Run from a terminal in the project root (joyoo is the project name)

CentOS or macOS: celery -A joyoo worker -l info

Windows: celery -A joyoo worker -l info -P eventlet (may also require pip install eventlet)
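
If the same project is started on both kinds of machine, the choice between the two commands can be scripted. This is only a sketch that mirrors the two command lines above; `worker_command` is a hypothetical helper and nothing here is part of Celery.

```python
import os


def worker_command(project, os_name=os.name):
    """Return the worker start command as a list of arguments."""
    cmd = ["celery", "-A", project, "worker", "-l", "info"]
    if os_name == "nt":
        # On Windows this post runs the worker with the eventlet pool
        cmd += ["-P", "eventlet"]
    return cmd


print(" ".join(worker_command("joyoo")))
```

The returned list can be handed to subprocess.call() from a small launcher script.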

# Run as a daemon

/root/.virtualenvs/blog/bin/celery multi start w1 -A joyoo -l info --logfile=./celerylog.log

Keeping the Celery worker running on CentOS 7

Using Supervisor to daemonize Celery on CentOS 7

Task failure and retry mechanism in Celery 4.3.0

https://www.freesion.com/article/3302161424/

Visit the view that calls the asynchronous task

http://127.0.0.1/test

Worker log

(joyoo) yinzhuoqundeMacBook-Pro:joyoo yinzhuoqun$ celery -A joyoo worker -l info

raven.contrib.django.client.DjangoClient: 2019-12-15 21:52:03,199 /Users/yinzhuoqun/.pyenv/joyoo/lib/python3.6/site-packages/raven/base.py [line:213] INFO Raven is not configured (logging is disabled). Please see the documentation for more information.

-------------- celery@yinzhuoqundeMacBook-Pro.local v4.3.0 (rhubarb)

---- **** -----

--- * *** * -- Darwin-18.6.0-x86_64-i386-64bit 2019-12-15 21:52:04

-- * - **** ---

- ** ---------- [config]

- ** ---------- .> app: joyoo:0x108f19da0

- ** ---------- .> transport: redis://127.0.0.1:6379/0

- ** ---------- .> results:

- *** --- * --- .> concurrency: 12 (prefork)

-- ******* ---- .> task events: OFF (enable -E to monitor tasks in this worker)

--- ***** -----

-------------- [queues]

.> celery exchange=celery(direct) key=celery

[tasks]

. blog.tasks.task_send_dd_text

. blog.tasks.task_send_mail

[2019-12-15 21:52:04,882: INFO/MainProcess] Connected to redis://127.0.0.1:6379/0

[2019-12-15 21:52:04,892: INFO/MainProcess] mingle: searching for neighbors

[2019-12-15 21:52:05,915: INFO/MainProcess] mingle: all alone

[2019-12-15 21:52:05,931: WARNING/MainProcess] /Users/yinzhuoqun/.pyenv/joyoo/lib/python3.6/site-packages/celery/fixups/django.py:202: UserWarning: Using settings.DEBUG leads to a memory leak, never use this setting in production environments!

warnings.warn('Using settings.DEBUG leads to a memory leak, never '

[2019-12-15 21:52:05,931: INFO/MainProcess] celery@yinzhuoqundeMacBook-Pro.local ready.

[2019-12-15 21:57:45,830: INFO/MainProcess] Received task: blog.tasks.task_send_dd_text[c5c98287-f64d-468c-8889-28ee713a7612]

[2019-12-15 21:57:46,055: INFO/ForkPoolWorker-8] Task blog.tasks.task_send_dd_text[c5c98287-f64d-468c-8889-28ee713a7612] succeeded in 0.22136997600318864s: None

[2019-12-15 23:06:55,806: INFO/MainProcess] Received task: blog.tasks.task_send_dd_text[8511b870-d1b7-4540-bd20-ce1db206e81c]

[2019-12-15 23:06:56,060: INFO/ForkPoolWorker-9] Task blog.tasks.task_send_dd_text[8511b870-d1b7-4540-bd20-ce1db206e81c] succeeded in 0.25004262900620233s: None
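
Each "succeeded" line in the log carries the task name, the task ID, the run time, and the return value. The return value is None here because task_send_dd_text does not return anything. A small sketch for pulling those fields out of a log line; the regular expression is written against the lines shown in this post and may need adjusting for other log formats.

```python
import re

SUCCESS_LINE = re.compile(
    r"Task (?P<name>[\w.]+)\[(?P<task_id>[0-9a-f-]+)\] "
    r"succeeded in (?P<seconds>[\d.]+)s: (?P<result>.*)"
)


def parse_success(line):
    """Return the fields of a 'succeeded' log line, or None if it is another line."""
    match = SUCCESS_LINE.search(line)
    if match is None:
        return None
    info = match.groupdict()
    info["seconds"] = float(info["seconds"])
    return info


line = ("[2019-12-15 21:57:46,055: INFO/ForkPoolWorker-8] Task "
        "blog.tasks.task_send_dd_text[c5c98287-f64d-468c-8889-28ee713a7612] "
        "succeeded in 0.22136997600318864s: None")
info = parse_success(line)
print(info)
```

The task ID is the same value that django-celery-results stores with each result, so it can be used to match a log line to its stored result.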

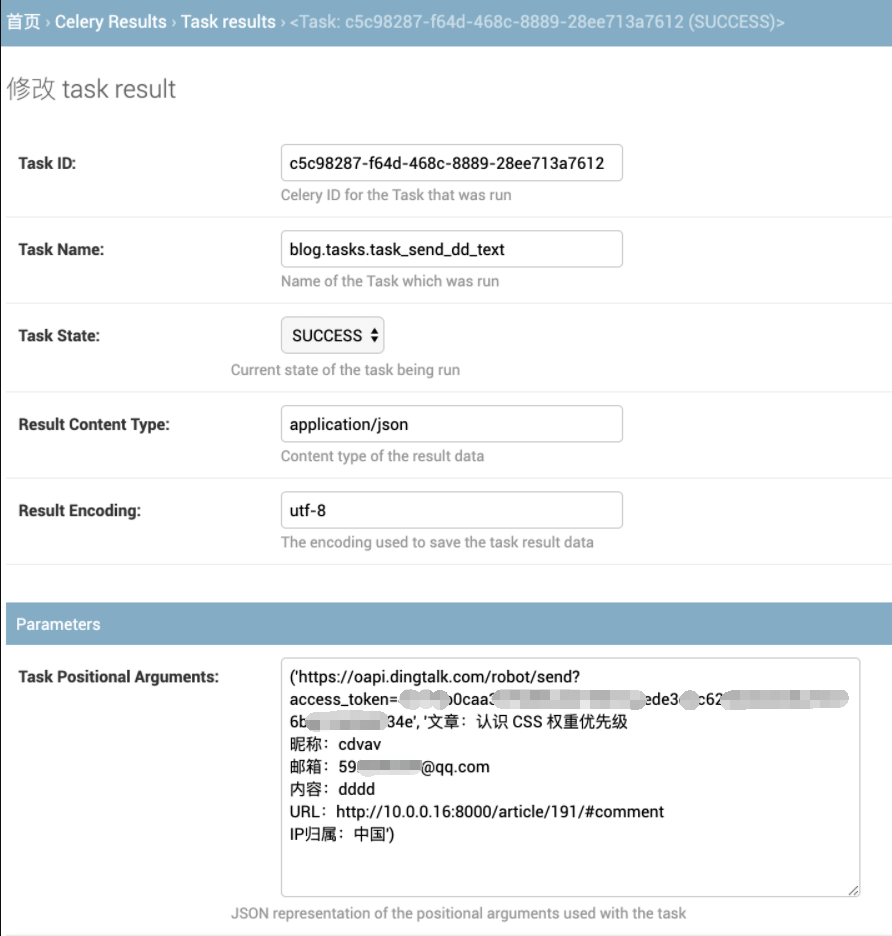

Viewing Celery asynchronous task results in the Django admin
